To everyone who put “a new commercial singing synthesizer” on their 2024 vocal synth bingo cards: this one is for you! Today, after almost two years of extensive research and development, we can finally share the first snippets of Mikoto Studio. We went through many design iterations and held regular meetings with our test users and various industry partners. Now, after ironing out the last details, let’s take a look at what we have so carefully put together.

We are ExpressiveLabs, developers of Mikoto Studio. We’re a young startup specializing in music technology, founded by Music Technology students from HKU (University of the Arts Utrecht, the Netherlands) together with creative minds from around the vocal synth community. You can learn more about our company and people on our website.

The case for Mikoto Studio

My name is Layetri, founder and lead developer at ExpressiveLabs. I created Mikoto Studio to fulfill my own needs and to provide the functionality I found missing in other singing synthesizers. I’ve been a user of commercial singing synthesis products since 2015, but I always felt like these products were missing something. Switching to a more open solution such as UTAU, however, never worked out for me either. I found the interface confusing, couldn’t figure out how to get it to produce sound, and finding voicebanks felt like navigating a maze (what does VCVVC even mean?). From this set of problems, my wish to create something simple and intuitive, yet open, was born. I wanted to create a singing synthesizer that fulfills my needs in five key areas:

- Openness

A program that can be extended and is easy to configure the way I want it. Think about this in terms of user-created voicebanks, a plugin ecosystem, or the possibility of hacking around to get more out of the software.

- Accessibility

The software has to be easy to understand. Although I’ve been lucky to receive music education from a very young age, many people wanting to use singing synthesizers don’t have this luxury. That’s why, to create a synthesizer that anyone can use, it needs to offer a clear and consistent design that is developed in collaboration with the end user.

- Affordability

I believe affordability comes in two forms. First of all, I want a singing synthesizer that is priced in a way that fits my budget. But there’s more to affordability than just the price of the software itself: I also want it to run on any hardware I can reasonably expect to come across. I don’t want to depend on powerful computers or expensive audio gear.

- Expressiveness

As an artist, it’s important to me that I can fully control what the software produces. In some cases this might mean tweaking individual characteristics of the sound, in others it’s enough to have a few macro-parameters that I can quickly adjust. Having the option to do both is vital for my creative process.

- Integration

One of my biggest annoyances in existing vocal synths is that they seem to almost universally exist completely outside of the regular process of composing and producing songs. To maintain my workflow and not get distracted, I would like to be able to perform most of the process in the same place.

Not all of these requirements have been fulfilled yet, but the design of Mikoto Studio outlines features and plans in all these categories. What we’ll show you today is not everything that is being worked on: we are limiting ourselves to features that are completely or mostly implemented. Please look forward to updates and announcements as development continues, because there are a lot of cool things that aren’t ready to be shared just yet!

Where do we stand?

Over the past two years, Mikoto Studio has developed from a vague concept into a full-fledged singing synthesizer with a small but dedicated community around it. The team behind it has grown a lot as well. While it started as just myself (and a few classmates who helped with certain tasks), we are now a team of over 20 people. Among us are developers, AI engineers, designers, artists, and singers. Most of us have a background in music as a performing artist or composer, and everyone is dedicated to using their background to create a better singing synthesizer for all.

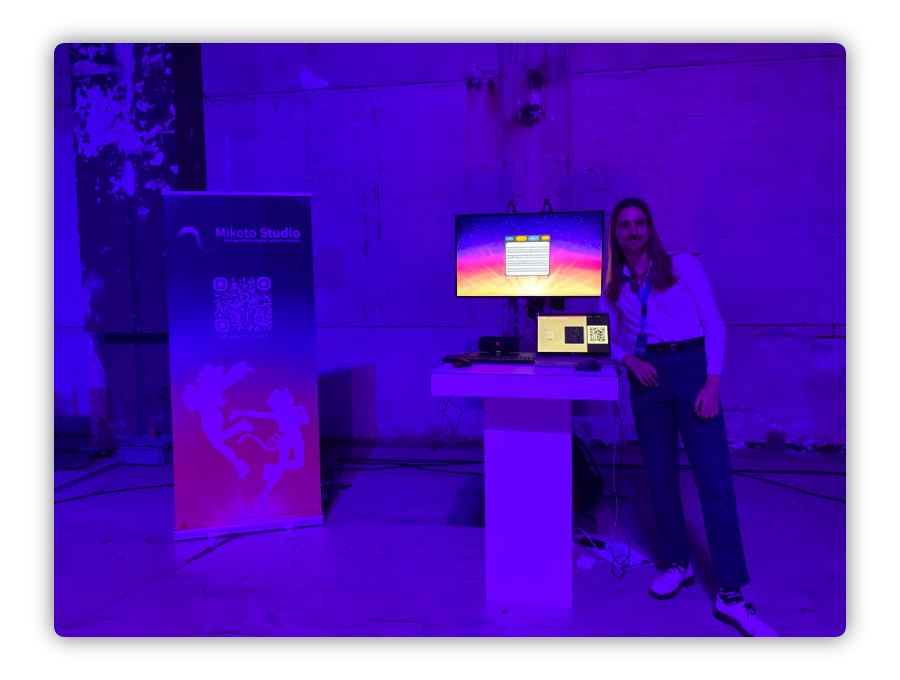

Besides a growing development team, we have also brought together a diverse group of testers. This group ranges from vocal synth veterans to visual artists, from experienced musicians to passionate fans. With their help, we have been able to fine-tune Mikoto’s design and functionality to strike the perfect balance between our core values and the wishes of the community. Apart from our testers, we have regularly presented our developments to people from outside the vocal synth community. This includes presentations to fellow Music Technology students and demonstrations at public university events. Additionally, we were present at the Night of the Nerds, an event for high school students interested in pursuing a career in STEAM. We used these events to test our designs in the real world, with a focus on approachability and ease of use.

Today we would like to share Mikoto Studio’s user interface design with you! Although some features are yet to be implemented, the design language is finalized and the most important components are all there. Please take a look at what we have created, and allow us to explain the vision behind it.

Building bricks

While we will dive deeper into the decisions and considerations that went into creating Mikoto’s user interface in another blog post, we would like to talk a little about how this design came to be. From the beginning, we built our design around several guiding principles:

- Related functionality is grouped together visually

Want to change how the voice sounds? You can do so in the “voice” panel! Looking to change the look of your Editor grid? The settings are right there in the Editor, and they give you direct visual feedback. We took extra care to make sure that all features are where you would expect them to be.

- There is no hidden functionality

Tying into the visual grouping, we wanted to make sure that everything you need is either always visible or is at most one click away. Most functionality from the menus is also available elsewhere, and vice versa. This gives you the flexibility to work however you want.

- Using color as a guide

To make a complex interface like Mikoto’s easier to understand, we use accent colors to help you navigate the software. For example, when working in the Editor, all functions related to the current clip will change color accordingly.

With these considerations in mind, let’s talk about the building blocks that make up the Mikoto Studio interface: the Arranger, the Editor, and the Enhancer.

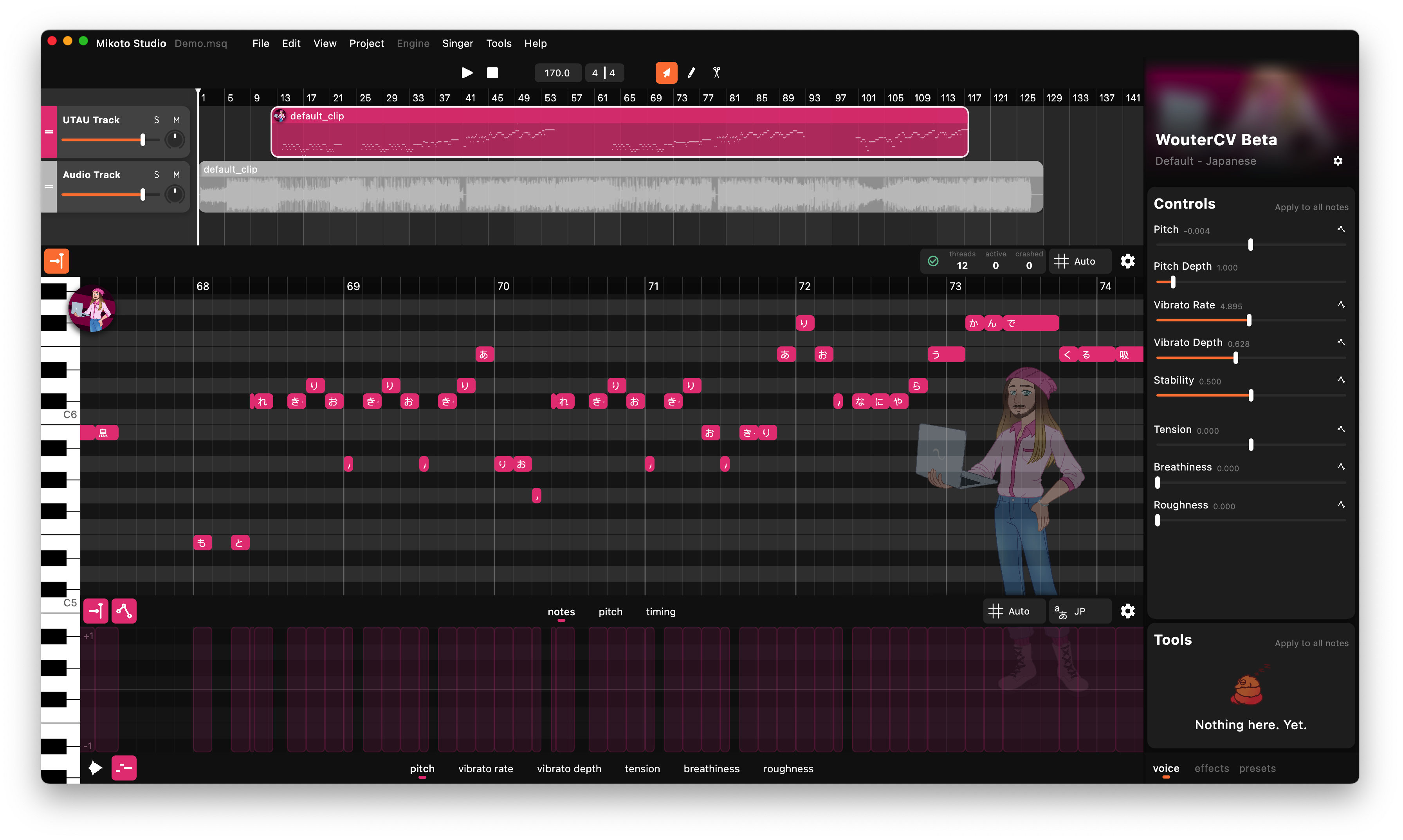

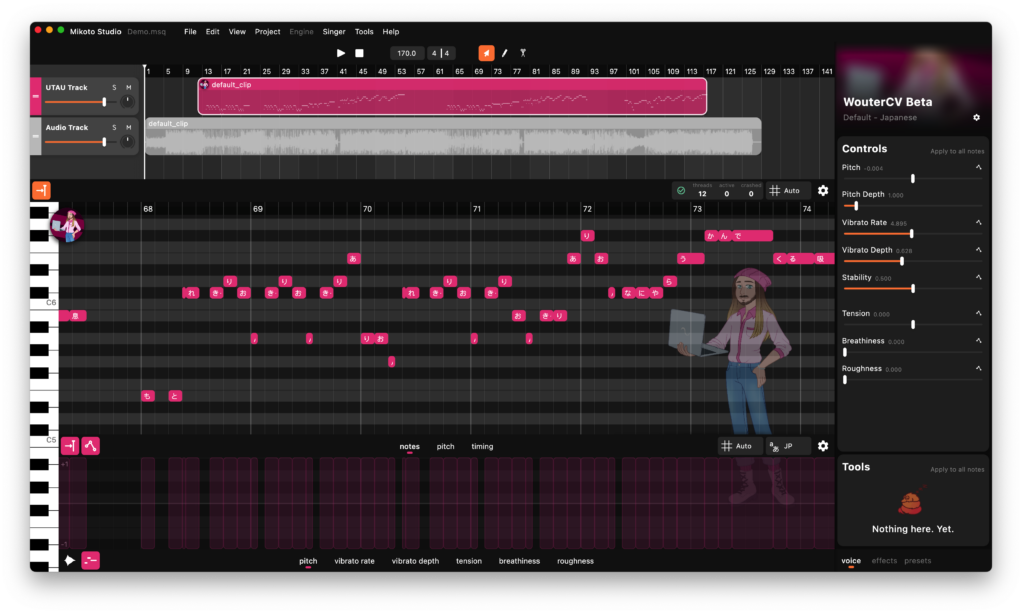

The Arranger

Mikoto’s Arranger provides a bird’s-eye view of your project. Manage tracks and clips to compose and arrange your songs, group tracks together to allow for easy mixing*, and add various types of automation for things like volume and panning*. Clips can be split, joined together, duplicated, and have various other editing operations applied to them.

*Not (fully) implemented yet.

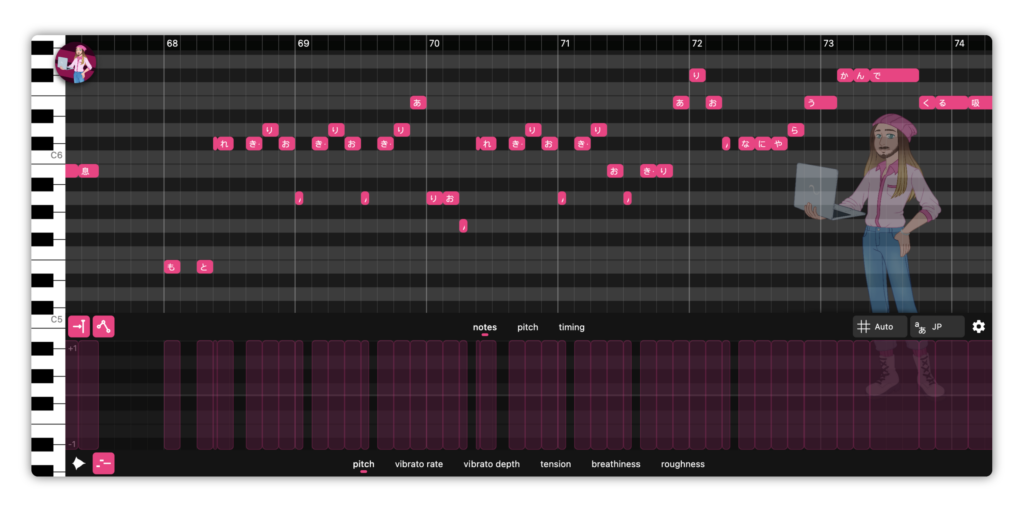

The Editor

We designed the Editor around a simple concept: while editing or tuning your vocals, you should never have to leave your workflow. Everything you need is right there and you can access the necessary features without the need to stop what you’re doing. Settings for the grid and various language options can be accessed through simple slide-up cards at the bottom of the screen. Visibility toggles for various parts of the interface are also provided and easily accessible.

The Editor includes intelligent grid snapping, which we carefully tuned to respond just the right way. Seamless automatic grid scaling and smart playhead following are just some of the other functions that come together to form a cohesive and intuitive editing experience.

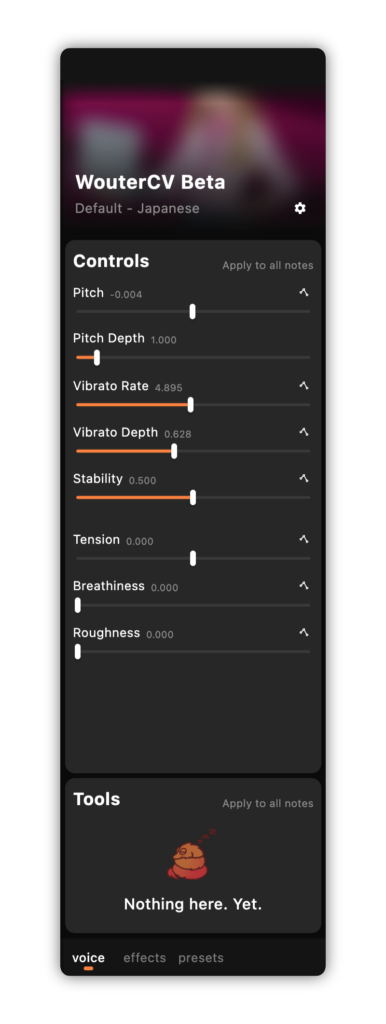

The Enhancer

This is where you’ll find everything you need to make your vocal tracks pop. Think of controls to easily change the sound of the voicebank and various tools to help you arrange and tune. A wide variety of parameters are available for you to tweak, with more being researched and developed as we speak.

Besides these voice controls, the Enhancer offers a collection of audio effects and parameter presets for you to use! Quickly prototype a composition in Mikoto Studio, or mix and export a demo without the need for additional software – the possibilities are endless.

Engines and Singers

Mikoto Studio packs two synthesis backends into one app. While future blog posts will go more into detail about how both of them work, we’ll give you a quick rundown of the basics here. Meet NEST and CHIRP, the two engines that form the beating heart of Mikoto.

NEST

NEST (New Expressive Singing Technology) is Mikoto’s proprietary AI engine. Using new technology and innovative research, we are developing a fast and lightweight neural synthesis engine for use with proprietary voicebanks. The NEST lineup already consists of several virtual singers, some of whom you’ll get to catch a first glimpse of very soon!

It’s important to note that Mikoto Studio will not support community-made AI voicebanks, due to various ethical and legal concerns. Find out more about the ethics of our products here.

CHIRP

The second engine, CHIRP, is a fast concatenative engine that brings full plug-and-play support for UTAU voicebanks. Simply drop your voicebank into Mikoto or select an existing voicebank folder, and CHIRP does the rest. We are taking extra care to make sure that this system is compatible with voicebanks of all shapes and sizes.

What’s next

Mikoto Studio is far from finished. Over the following weeks, we will make several announcements and reveal more about what we’ve been up to. Most of these will be published on this blog, but we’ll also post links and updates on our Twitter. If you want to stay up to date, you can sign up for the Mikoto Studio waitlist! This will also grant you access to our community Discord server, where the development team is very active and regularly posts updates. In the near future, we’ll publish deep-dive blog posts on various topics related to the design and development of Mikoto. These topics include:

- How we switched from the industry-standard JUCE framework to a modern tech stack using Rust and Flutter.

- How we designed the Mikoto Studio user interface and what decisions we made in the process.

- How we obtain the data we train our AI models on, and how we make sure this data collection happens ethically.

- How we developed our upcoming singer characters, Sayaka and Jinshi, to align with the needs and wishes of the vocal synth community.

In the meantime, development continues. We are committed to bringing Mikoto Studio to market in 2024, but there’s a lot of work that still needs to be done. From now on, however, we will keep you updated on how the project progresses!

Thank you for taking the time to read this article. We look forward to being able to share more information in the future, and we hope you’ll join us on our journey.